(2013)

Dynamical systems that maximize their future possibilities.


The second law of thermodynamics, which says that the entropy of an isolated system never decreases, dictates that such a system always evolves toward greater disorder in the way its internal components are arranged. In Physical Review Letters, two researchers explore a mathematical extension of this principle that focuses not on the arrangements the system can reach now, but on those that will become accessible in the future. They argue that simple mechanical systems postulated to follow this rule show features of “intelligence,” hinting at a connection between this most human of attributes and fundamental physical laws.

Entropy measures the number of internal arrangements of a system that result in the same outward appearance. Entropy rises because, for statistical reasons, a system evolves toward states that have many internal arrangements.

A variety of previous research has provided “lots of hints that there’s some sort of association between intelligence and entropy maximization,” says Alex Wissner-Gross of Harvard University and the Massachusetts Institute of Technology (MIT). On the grandest scale, for example, theorists have argued that favoring the possible universes that create the most entropy selects cosmological models that allow intelligent observers to emerge [1].

Hoping to firm up such notions, Wissner-Gross teamed up with Cameron Freer of the University of Hawaii at Manoa to propose a “causal path entropy.” This entropy is based not on the internal arrangements accessible to a system at any moment, but on the number of arrangements it could pass through on the way to possible future states. They then calculated a “causal entropic force” that pushes the system to evolve so as to increase this modified entropy. This hypothetical force is analogous to the pressure that a gas-filled compartment exerts on a piston separating it from a nearly evacuated compartment.
In this example, the force arises because the piston’s motion increases the entropy of the filled compartment more than it reduces that of the nearly empty one.
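The piston analogy can be made quantitative with textbook statistical mechanics, which also shows the structure the causal entropic force borrows. As a minimal worked example, assume two ideal-gas compartments at a common temperature $T$ holding $N_1$ and $N_2$ molecules, separated by a piston of area $A$ at position $x$ in a cylinder of length $L$ (these symbols are introduced here for illustration only). Using $S = k_B \ln \Omega$, with the positional part of $\Omega$ scaling as $V^N$ at fixed $T$, the first two lines give the total entropy and the resulting force. The last two lines restate, as we understand the PRL paper's definitions, the causal analogue: the entropy of the system's possible paths $x(t)$ over a time horizon $\tau$ replaces $S$, and a “causal path temperature” $T_c$ replaces $T$.

$$
\begin{aligned}
S(x) &= N_1 k_B \ln(Ax) + N_2 k_B \ln\bigl(A(L-x)\bigr) + \text{const},\\
F(x) &= T\,\frac{\partial S}{\partial x} = k_B T\left(\frac{N_1}{x} - \frac{N_2}{L-x}\right) = (P_1 - P_2)\,A,\\
S_c(\mathbf{X},\tau) &= -k_B \int_{x(t)} \Pr\bigl(x(t)\mid x(0)\bigr)\,\ln \Pr\bigl(x(t)\mid x(0)\bigr)\,\mathcal{D}x(t),\\
F(\mathbf{X}_0,\tau) &= T_c\,\nabla_{\mathbf{X}} S_c(\mathbf{X},\tau)\Big|_{\mathbf{X}_0}.
\end{aligned}
$$

Here $P_i = N_i k_B T / V_i$, with $V_1 = Ax$ and $V_2 = A(L-x)$, are the ideal-gas pressures. The piston moves toward the nearly empty side whenever $N_1/x > N_2/(L-x)$ and comes to rest at $x^* = L N_1/(N_1+N_2)$, where a small displacement no longer changes the total entropy.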

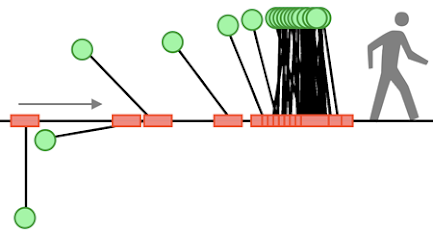

In contrast with the usual entropy, no known fundamental law stipulates that this future-looking entropic force governs how a system evolves. But as a thought experiment, the researchers simulated the behavior of simple mechanical systems that included the force, and the effects were profound. For example, a particle wandering in a box did not explore the volume randomly but found its way to the center, where it was best positioned to move anywhere in the box. Another simulation tracked the motion of a rigid pendulum hanging from a pivot that could slide back and forth horizontally. The pendulum eventually moved into an inverted configuration, which is unstable without the modified entropic force. From this upside-down position, the researchers argue, the pendulum can most easily explore all other possible positions.
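The particle-in-a-box result can be reproduced qualitatively in a toy model. The sketch below illustrates the idea under stated assumptions and is not the authors' simulation. The box is a one-dimensional lattice of sites, and a “path” is a lazy nearest-neighbour walk (step left, stay, or step right) of `tau` steps that may never leave the box. Every allowed path is taken as equally likely, so the causal path entropy reduces to k_B ln N(x), where N(x) is the number of allowed paths starting at site x. The particle then simply steps in the direction of a finite-difference entropic force. All function names (`count_paths`, `causal_path_entropy`, `causal_entropic_force`, `relax`) and the parameters `tau`, `k_b` and `t_c` are hypothetical.

```python
import math


def count_paths(n_sites: int, tau: int) -> list[int]:
    """Number of tau-step lazy walks (left, stay, right) from each site that never leave the box."""
    counts = [1] * n_sites  # zero-step walk: exactly one from every site
    for _ in range(tau):
        new_counts = []
        for x in range(n_sites):
            # A (k+1)-step walk from x is a first move to x-1, x or x+1 (if inside
            # the box) followed by any allowed k-step walk from that site.
            total = counts[x]
            if x > 0:
                total += counts[x - 1]
            if x < n_sites - 1:
                total += counts[x + 1]
            new_counts.append(total)
        counts = new_counts
    return counts


def causal_path_entropy(n_sites: int, tau: int, k_b: float = 1.0) -> list[float]:
    """Toy S_c(x) = k_b * ln N(x), assuming every allowed path is equally likely."""
    return [k_b * math.log(n) for n in count_paths(n_sites, tau)]


def causal_entropic_force(entropy: list[float], x: int, t_c: float = 1.0) -> float:
    """Finite-difference t_c * dS_c/dx: central in the interior, one-sided at the walls."""
    lo, hi = max(x - 1, 0), min(x + 1, len(entropy) - 1)
    return t_c * (entropy[hi] - entropy[lo]) / (hi - lo)


def relax(start: int, n_sites: int = 21, tau: int = 200, max_steps: int = 100) -> int:
    """Move the particle one site at a time along the force until the force vanishes."""
    entropy = causal_path_entropy(n_sites, tau)
    x = start
    for _ in range(max_steps):
        force = causal_entropic_force(entropy, x)
        if force > 0:
            x += 1
        elif force < 0:
            x -= 1
        else:
            break  # zero finite-difference gradient: the particle stays put
    return x


for start in (0, 4, 20):
    print(start, "->", relax(start))
```

With a long horizon (`tau=200` on a 21-site box) the particle ends at the central site 10 from any starting point, because paths starting near a wall are more often cut off. With `tau=4`, a particle starting at the left wall stalls at site 5. From there no 4-step walk can reach a wall, so the entropy landscape is locally flat and the toy force vanishes. In this toy model, how far ahead the system “looks” determines how much of the box it can sense.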

The researchers interpreted this and other behaviors as indications of a rudimentary adaptive intelligence, in that the systems moved toward configurations that maximized their ability to respond to further changes. Wissner-Gross acknowledges that “there’s no widely agreed-upon definition of what intelligence actually is,” but he says that social scientists have speculated that certain skills prospered during evolution because they allowed humans to exploit ecological opportunities. In that vein, the researchers connect the inverted pendulum’s mechanical “versatility” to the ability that bipeds like us need to make the many on-the-fly adjustments that keep us balanced while walking.

Wissner-Gross and Freer simulated other idealized tasks that mimic standard animal intelligence tests. They emulated “tool use” with a model in which a large disk can gain access to a trapped disk by hitting it with a third disk. Another task, “social cooperation,” required two disks to coordinate their motions. In both cases, the simulated systems achieved these goals under the modified entropic force alone, without any further guidance. These two behaviors, along with grammar-driven language, which Wissner-Gross says he also replicated, have been invoked by Harvard’s Steven Pinker as characteristic of the human “cognitive niche.” “We were quite startled by all of this,” Wissner-Gross says.

Computer scientists have previously used a version of causal entropy to guide algorithms that adapt to continually updated information. The new formulation is not meant to be a literal model of how intelligence develops, but it points toward a “general thermodynamic picture of what intelligent behavior is,” Wissner-Gross says. The paper provides an “intriguing new insight into the physics of intelligence,” agrees Max Tegmark of MIT, who was not involved in the work. “It’s impressive to see such sophisticated behavior spontaneously emerge from such a simple physical process.”

–Don Monroe

physics.aps.org comment: I mentioned the same idea in a post here (on Facebook), in poor Italian, a bit over a year ago: that consciousness might be partially describable as an emergent property of entropy. On reading the MIT study, I commented basically the same thing in the group page post. A moderator indirectly accused me of hubris, which is kind of silly, and ironic. It's about the stuff, not personalities or likes. The indirect slight involved the concept of entropy and intelligent systems. At MIT over the past week a study emerged that implies the former might be used in part to describe the latter, including aspects of us (see the link). It's likely that many have had the same or a similar thought. In fact, if you see some things a certain way (change the concept of intelligent systems to consciousness, and imagine that it is inevitably a plural concept, since getting rid of an observer remains awfully tricky when trying to delineate consciousness into a definition), then defining a singular or particular kind of consciousness effectively implies interrupting that infinite regression. Necessarily so, so that it can lead to other things further along instead of remaining stuck in that rabbit hole: other things involving systems of integrated information and time, maybe some odd aspects of the two, maybe other stuff; the why, or the first whys (fundamental interactions leading to emergence and complexity). But that would take too long, and my lunch lentils are almost cooked. Anyway, if you breathe in deeply enough you can catch the odor... it's already in the air. Not the beans: the direction and implications of the rapidly increasing data from cogsci and other fields. If you can't smell it, try again. Or check to see if your nose is... open.
